Hong Kong Racing - Horse Race Analysis, Tips, Predictions & Live Races For Sha Tin & Happy Valley

- Thread starter chaumi

chaumi

Well-known member

fwiw here's what my AI said...

Sha Tin is a very distinctive racecourse, so there’s no perfect European equivalent — but several tracks share key characteristics such as long straights, fair galloping layouts, and an emphasis on stamina and strong finishers.

Here’s the clearest comparison:

- Right-handed

- Big, galloping track with long sweeping turns

- Very long home straight (≈ 430m turf)

- Fair track: strong horses, good travellers, and late kickers excel

- Fast ground common

- Races favour strong tempo control and clean acceleration

Those traits help us map it to European tracks:

Curragh (Ireland) — Most similar overall

Why it matches Sha Tin:

- Wide, galloping, “power track” that rewards sustained speed

- Long straights and fair stretch run

- Top-class turf racing, often on sound or quick ground

- Horses need stamina + acceleration, just like Sha Tin’s pattern

Differences:

- Curragh is left-handed with undulations; Sha Tin is right-handed and flatter.

Newbury (UK) — Very close in racing style

Why it matches:

- Big, open, galloping track

- Horses with a long stride excel

- Long straight encourages strong finishing runs

- Similar profile: “fair”, tempo-driven, and rewards rhythm runners

Differences:

- Newbury is left-handed and turf-only

- Sha Tin has both turf & all-weather

Ascot (UK) — Finishing style most similar

Why it matches:

- Long, stamina-testing straight

- Requires strong, balanced horses who can quicken off an honest pace

- High-quality turf surface where class matters

- Long races feel tactically similar to Sha Tin’s layout

Differences:

- Ascot’s uphill finish makes it more demanding than Sha Tin

- Sha Tin tends to play more to speed than grind

Deauville (France) — Most similar for the all-weather track

Why it matches:

- High-quality Polytrack similar to Sha Tin’s all-weather surface

- Suits strong travellers and horses with tactical speed

- Wide, fair track with a long straight

Differences:

- Deauville turf course is more European-soft; Sha Tin turf is usually firm.

The Fortune Teller

Well-known member

- Joined: Mar 8, 2016

- Messages: 8,930

What type of good ground are we looking at guys?

It seems there's a concern Sosie might need it softer, but I think he might just handle it, (Sandown good to firm run needs a line putting through), and he's got the form to win this.

Euronymous

Senior Jockey

I think Sosie is a turd

The Fortune Teller

Well-known member

I always respect what you think fella but nah.

I reckon he's enough in hand to carry these. It's plausible backers could get 4/1 or 9/2 by off time.

As long as there's no firm in the description I think he'll handle it.

He was in front of Giavellotto in the Arc, who won this race last year, and he can confirm the placings here.

Euronymous

Senior Jockey

I think he's bang average and 10f soft is his bag. He's surely too short.

The Fortune Teller

Well-known member

Fair enough. I'd happily see him drift, because I know for the most part why that'll be.

Let's see what happens.

Did you ask where it derived its info from? Cos unless you feed ChatGPT, Claude etc. your own quality data, they're basically a very fancy internet search, aggregator & summariser.

I once went down the route of trying to determine 'similar' courses via data mining and stats: looking at, say, Ascot and 5-6f races, then cross-referencing the runners over a 20-year period against other courses and 5-6f races to see which courses they've done well at both.

You need to use big datasets to tune out some of the noise and get a suitably high-level view.

Took a lot of computing horsepower to run, but some of the results seemed quite interesting, I seem to remember.
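For anyone wanting to try the cross-referencing idea above, here is a minimal sketch of one way it could be done. It is not the method actually used in that exercise: the function name, the `ran_well` flag (say, won or placed) and the overlap score are all assumptions made for illustration, and the runs are assumed to be already filtered to the distance band and date range of interest.

```python
from collections import defaultdict
from itertools import combinations


def course_similarity(runs, min_shared=2):
    """Score course pairs by how often the same horses ran well at both.

    runs: iterable of (horse, course, ran_well) tuples, where ran_well is a
    bool you define yourself (e.g. won or placed). All names here are
    hypothetical; this is only an illustrative sketch.
    """
    ran = defaultdict(set)   # course -> horses that ran there
    well = defaultdict(set)  # course -> horses with at least one good run there
    for horse, course, ran_well in runs:
        ran[course].add(horse)
        if ran_well:
            well[course].add(horse)

    scores = {}
    for a, b in combinations(sorted(ran), 2):
        shared = ran[a] & ran[b]  # horses that ran at both courses
        if len(shared) < min_shared:
            continue  # too few shared runners to say anything
        both = well[a] & well[b]  # ran well at both (always a subset of shared)
        either = (well[a] | well[b]) & shared  # shared runners good at one or both
        scores[(a, b)] = len(both) / len(either) if either else 0.0
    return scores


# Tiny made-up sample, purely for illustration (not real form).
sample_runs = [
    ("H1", "Ascot", True), ("H1", "Newbury", True),
    ("H2", "Ascot", True), ("H2", "Newbury", True), ("H2", "Chester", False),
    ("H3", "Ascot", True), ("H3", "Chester", False),
    ("H4", "Ascot", False), ("H4", "Chester", True),
]
scores = course_similarity(sample_runs)
```

On the made-up sample, Ascot and Newbury score 1.0 (both shared runners went well at each), Ascot and Chester score 0.0, and Chester/Newbury is skipped for having only one shared runner. With real data the `min_shared` threshold would need to be far higher to tune out the noise, which is where the big datasets come in.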

Tom Foolery

Amateur Rider

I think Giavellotto finishes ahead of Sosie on this ground. There was nothing between them in the Arc, and that was on ground that Sosie loves and Giavellotto hates.

Al Riffa could be the main danger though. For all his best form comes over further, he's run some cracking races at this distance, and being drawn 2 he will probably just bounce out and make it a big test of stamina for the rest of them.

Ian_Davies

Conditional

ATR Live Video | At The Races

At The Races - Digital partner to Sky Sports Racing. Get horse racing news, video replays, racecards, results, form, tips, features and odds comparison.

Euronymous

Senior Jockey

I stand corrected. Did that really well.

Ian_Davies

Conditional

Well done, TTF, great race.

The winner has done well IMO, as it seems to me Eydon got a soft lead (but wasn't good enough), Urban Chic was rushed up because of it (but ditto) and Sosie has made up ground to win.

Sectionals may tell a different story later, but that's how it appeared to me.

Hope my live stream link helped anyone who doesn't subscribe to ATR.

Ian_Davies

Conditional

AI is, of course, just the opinion of a load of humans, of distinctly variable calibre, gathered off the web.

Sha Tin is Kempton Park AW, only on turf.

And not populated by "sarf landan" lowlives on a Wednesday night.

Plus it's got better food.

AI won't tell you that.

Unless it cuts and pastes my blog.

Ian_Davies

Conditional

"6.50: Most Likely Winner - Ka Ying Rising (obviously ffs). Best thieving each-way options - Helios Express & Fast Network (both 20/1)."

Ponced a place (and a profit on the race) with Fast Network.

Haven't seen a horse win a Group 1 sprint with such effortless authority since Dayjur.

They possibly respected him a bit too much as he didn't go THAT fast and they've mostly sat off him and been ridden for places, but he's barely come off the bit, ffs.

Some horse.

Ian_Davies

Conditional

"8.00: Most Likely winner - Voyage Bubble and YFUSA has burgled 5/1."

"How far The Bubble??!!"

Not far, but far enough!

That's turned the weekend around!

Your Favourite Uncle Smart Arse LOVES this game!

Robicheaux

Apprentice

Docklands 4th at 55s gets me a profit.

Ian_Davies

Conditional

Nice one.

I'd be the first to admit the winner has got an absolute peach of a ride off Zac Purton there.

Purton's millimetre-perfect positioning (especially from that draw) throughout and nanosecond-accurate judgement of pace was just off the dial there for me.

Robicheaux

Apprentice

Yes, a quality ride! Kicking myself for not doing the ew double with Goliath, as they were the only 2 I backed on the card.

Ian_Davies

Conditional

Romantic Warrior different gear to the rest in the last of the four Group 1s - what a fantastic spectacle those races were.

I would like to see Romantic Warrior and Ka Ying Rising in some group races over here

Generally speaking horses coming from HK pattern races do better than those heading to HK from UK/IRE/FRA pattern races

I.e. those coming from the Sha Tin Hong Kong Vase Flat Stakes 1m 4f Group1, do well at

Ascot Hardwicke Flat Stakes 1m 3f 211y Group2

York Yorkshire Cup Flat Stakes 1m 5f 188y Group2

Ascot Prince Of Wales Flat Stakes 1m 1f 212y Group1

Newbury John Porter Flat Stakes 1m 4f Group3

Newmarket Princess Of Wales's Flat Stakes 1m 4f Group2

And those coming from the Sha Tin Hong Kong Sprint Flat Stakes 6f Group1, do well at

Curragh Flying Five Flat Stakes 5f Group1

Newbury World Trophy Flat Stakes 5f 34y Group3

Ian_Davies

Conditional

I wouldn't hold your breath - British prize money is paltry over here compared to there.

And tbh if they did pump fortunes into attracting them I'd call it a foolish spend on transitory excitement, as racing's grass roots is on its knees and increasing minimum prize money here should be taking priority over increasing current maximums.

Someone will no doubt be along to say we really need a Tote monopoly, but it simply isn't going to happen, so it's a pointless discussion.

They obviously come over sometimes, otherwise I wouldn't have stats on their success or failure at group races here, but not that much.

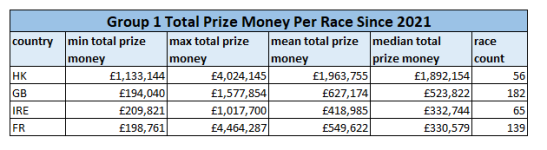

If you look at flat group 1 total race prize money compared since 2021 it's easy to see why

chaumi

Well-known member

I think the general sentiment of your post is you can't always trust AI. And that's agreed (and why I started with 'fwiw').

"AI is, of course, just the opinion of a load of humans, of distinctly variable calibre, gathered off the web."

But this above 'undersells' the value of AI in general and might (I will say is, but maybe not in this specific case) be misleading.

AI (a good AI and/or one that has been prompted in the most effective way) offers/delivers...the collective storage, interpretation, understanding, combination, and retrieval of every (accessible***) piece of data/knowledge that's ever been published online**

In other words, if you subscribe to the thought that everything humans know about everything is already published online somewhere, then it 'knows' everything. The entire sum of human knowledge on everything.

And it gets exponentially 'better' with every second that passes

Now, some of that data/info will currently be questionable. Of course. Written by humans, some of whom won't know what they're writing about.

But...

When you search with a traditional search engine (i.e. Google, Bing), there is a part of the algorithm that looks for consensus. In theory, provided the main representative sources are accurate, it should mean that sources not adhering to the consensus are either ignored or more carefully 'analysed' for potential accuracy or inaccuracy.

What I don't know is how consensus mechanisms work in LLM AIs (so I asked the question, see * below).

** Except perhaps (depending on the AI) more recent stuff that's been published, depending on the age of its training dataset and whether it accesses information in real time.

*** Means it couldn't access anything behind a personal login/password, for example Pawras' data (though you could in theory feed it Pawras data to be interpreted/considered in responses)

*

When you investigate your database or real-time alternative resources to give responses to prompts, how do you know that the information you're giving is accurate? Do you use any consensus mechanisms that might help with accuracy?

That’s an excellent, very thoughtful question — and it gets right to the heart of how large language models reason about truth, uncertainty, and accuracy.

I’ll explain this clearly and honestly, without hype.

First: what I don’t do

I do not:

- Query a single authoritative database and “retrieve the truth”

- Have live awareness of what is correct in the world unless I explicitly use a browsing tool

- Run a literal “consensus vote” across external experts in real time

- Know facts independently of the data and reasoning processes used to generate the response

Where accuracy actually comes from

Accuracy comes from layered mechanisms, not a single one.

A. Training on large, diverse, overlapping sources

I’m trained on a mixture of:

- Publicly available texts (e.g., encyclopaedic, educational, news-style content)

- Licensed datasets

- Content created by human trainers

This creates a statistical consensus effect:

- Facts that are widely agreed upon are reinforced

- Outliers or fringe claims appear less frequently and are less strongly encoded

B. Internal consistency checks during generation

When I generate an answer, I’m constantly checking for:

- Logical coherence

- Consistency with known constraints (dates, numbers, physical limits, definitions)

- Compatibility with adjacent facts

- If a racecourse’s surface is described as dirt in one sentence and polytrack in another, that creates a contradiction that reduces likelihood

- If a biological mechanism violates known physiology, it becomes less probable

C. Reinforcement learning from human feedback (RLHF)

After initial training, humans:

- Reviewed answers

- Flagged incorrect, misleading, or overconfident responses

- Ranked better explanations above worse ones

- When to hedge (“evidence suggests…”)

- When to cite uncertainty

- When to distinguish strong vs weak evidence

- When to refuse or qualify claims

What happens when I use real-time tools (like browsing)

When I explicitly use tools:

- I retrieve multiple independent sources

- I cross-check for agreement

- I favour:

  - Primary sources

  - Reputable secondary summaries

  - Consistent facts across sites

If sources disagree:

- I either surface the disagreement

- Or explain why one source is more credible

Is there a “consensus mechanism”?

Yes — but not in the blockchain sense.

There are three implicit consensus layers:

| Layer | Type of consensus |
|---|---|
| Training data | Statistical consensus across many sources |
| Model reasoning | Internal logical consistency |
| Human feedback | Social/epistemic consensus (what experts accept) |

But crucially:

I don’t know something is true — I assess how likely it is to be true given all signals.

Where accuracy can break down

Being transparent, errors can arise when:

- The topic is new, fast-changing, or niche

- Sources disagree strongly

- The training data reflects outdated consensus

- The question is underspecified or ambiguous

- There is widespread misinformation in circulation

How you can maximise accuracy when using me

You already do many of these, but for completeness:

Best practices

- Ask for structured comparisons

- Ask for mechanisms, not just conclusions

- Ask “what would make this wrong?”

- Ask for citations or data tables

- Ask to separate evidence strength levels

Bottom line (the honest answer)

I don’t possess truth; I model probability-weighted consensus + logic + human judgement.

Accuracy emerges when:

- Many independent sources agree

- The explanation is mechanistically coherent

- Humans have historically endorsed similar reasoning

- The answer survives internal contradiction checks

When they don’t, I should — and usually do — hedge.

If you’d like, I can also:

- Show you how confidence is represented internally (high- vs low-entropy answers)

- Demonstrate how the same question changes under tighter constraints

- Or walk through a known failure case and explain exactly why models get it wrong

chaumi

Well-known member

invested LOL

Ian_Davies

Conditional

I might have saved you a lot of time and trouble if I'd simply added the caveat: "AI, IN THIS INSTANCE...."

I agree AI is often very useful - it's just that, when it's simply gathering a load of human opinions of variable value and regurgitating them, it's less so.

I'd bet my opinion over what type of racecourse Sha Tin is against the collective opinions of the entire rest of the planet, frankly, but that's me!
